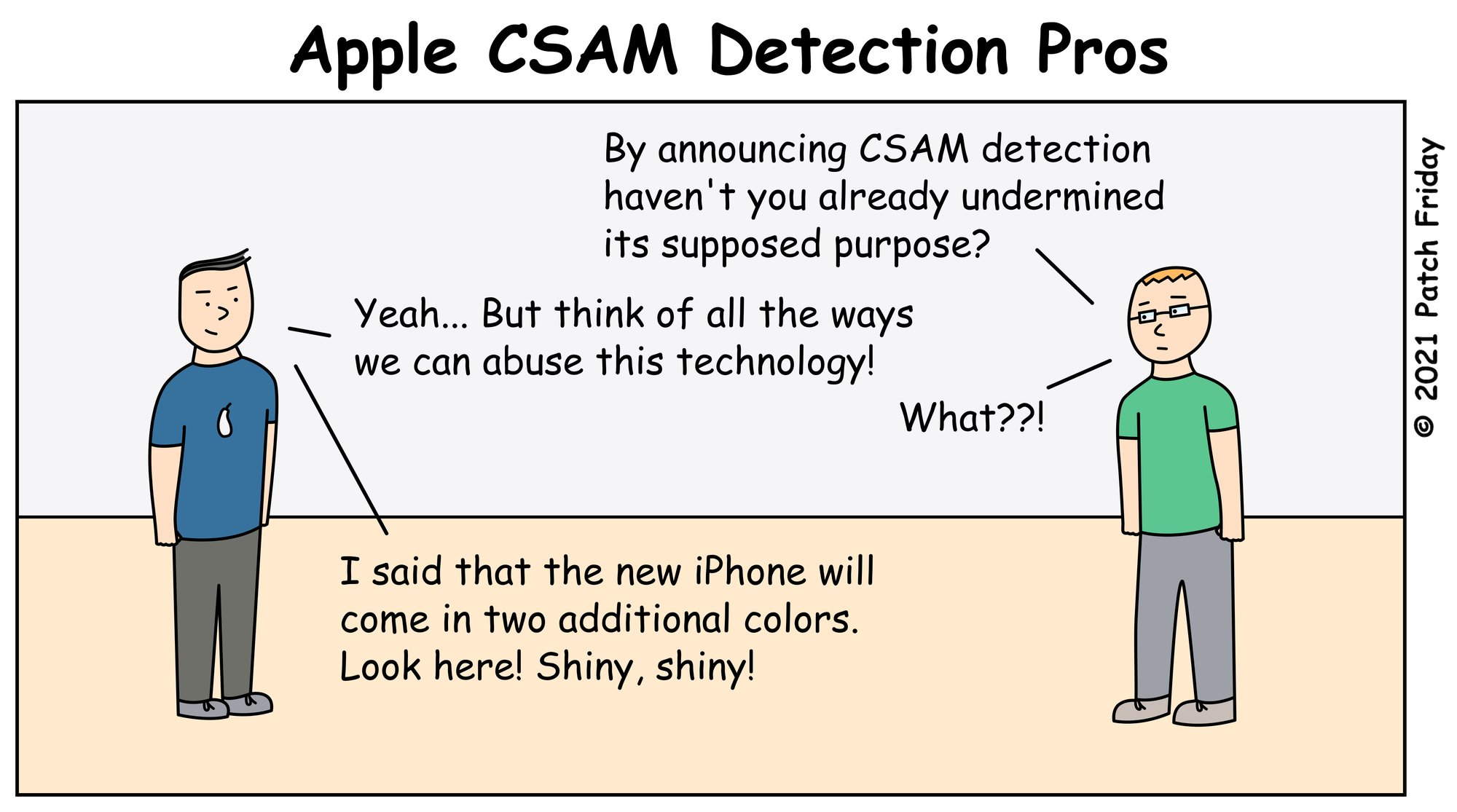

CSAM Detection | Patch Friday On Twitter Apple CSAM Detection Pros Https T Co Viw0j2tk7n

This database is provided by NCMEC and similar organizations. Does this mean that photos only ...

Apple Is Delaying Its Child Safety Features Engadget

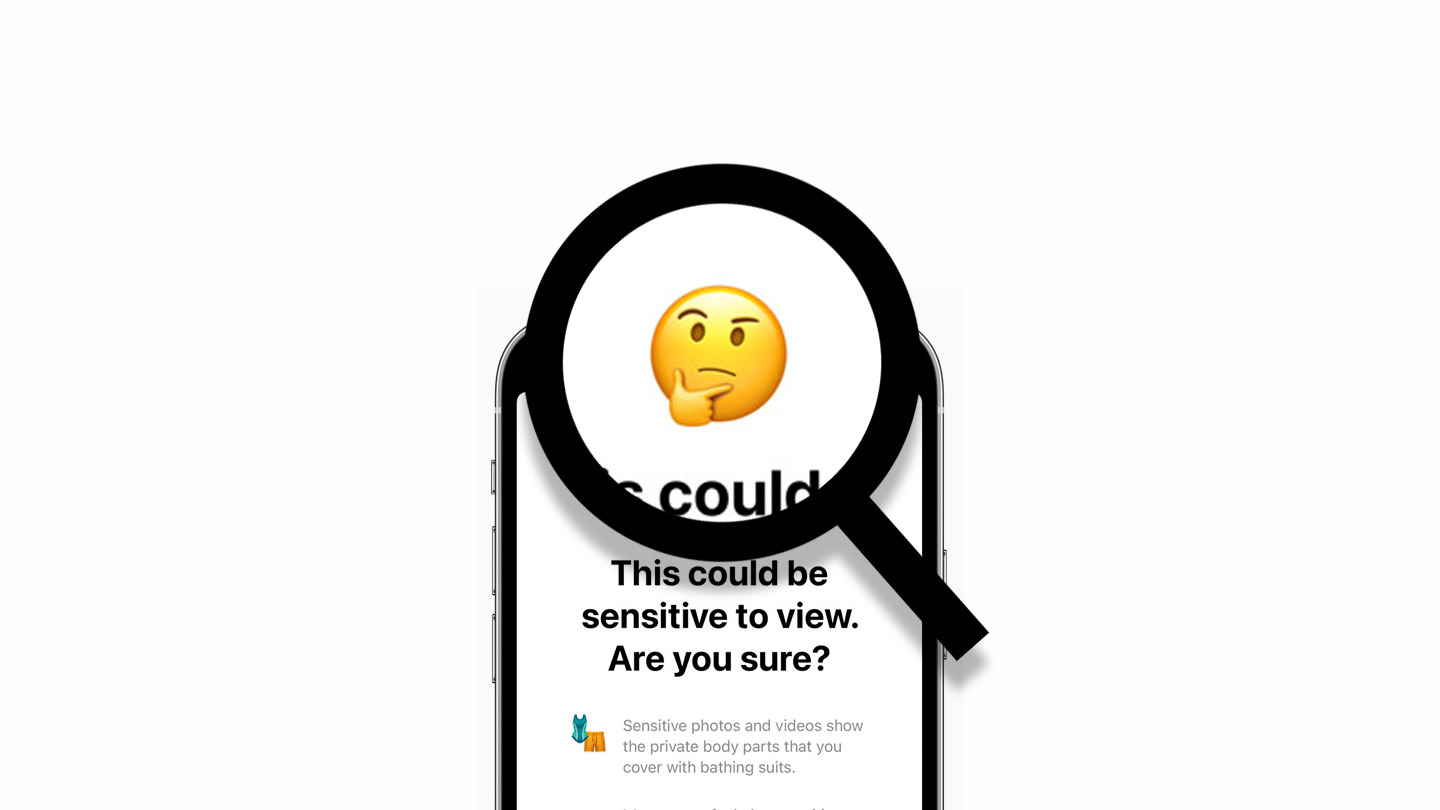

Siri and Search will also intervene when users try to search for CSAM-related topics.

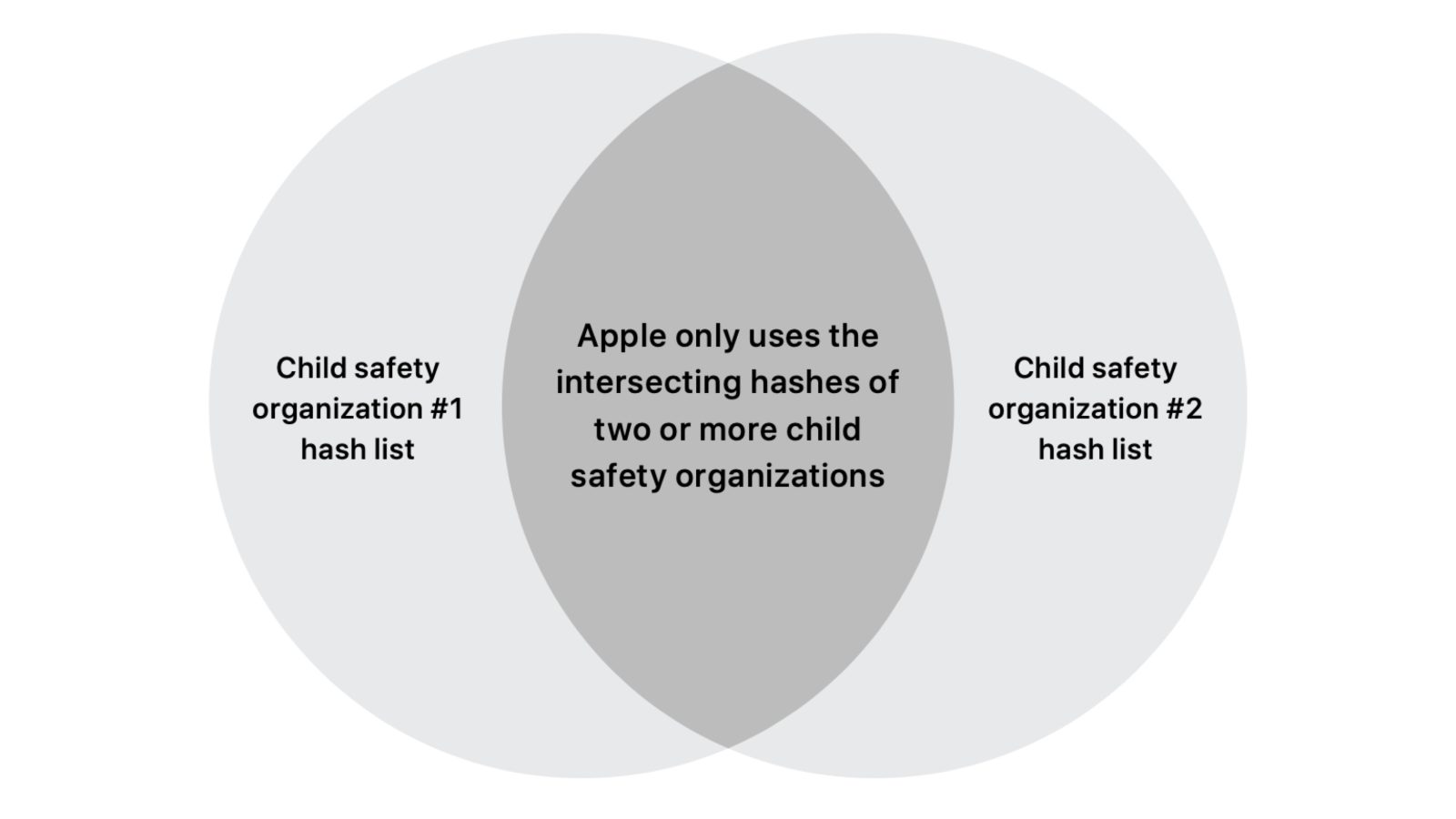

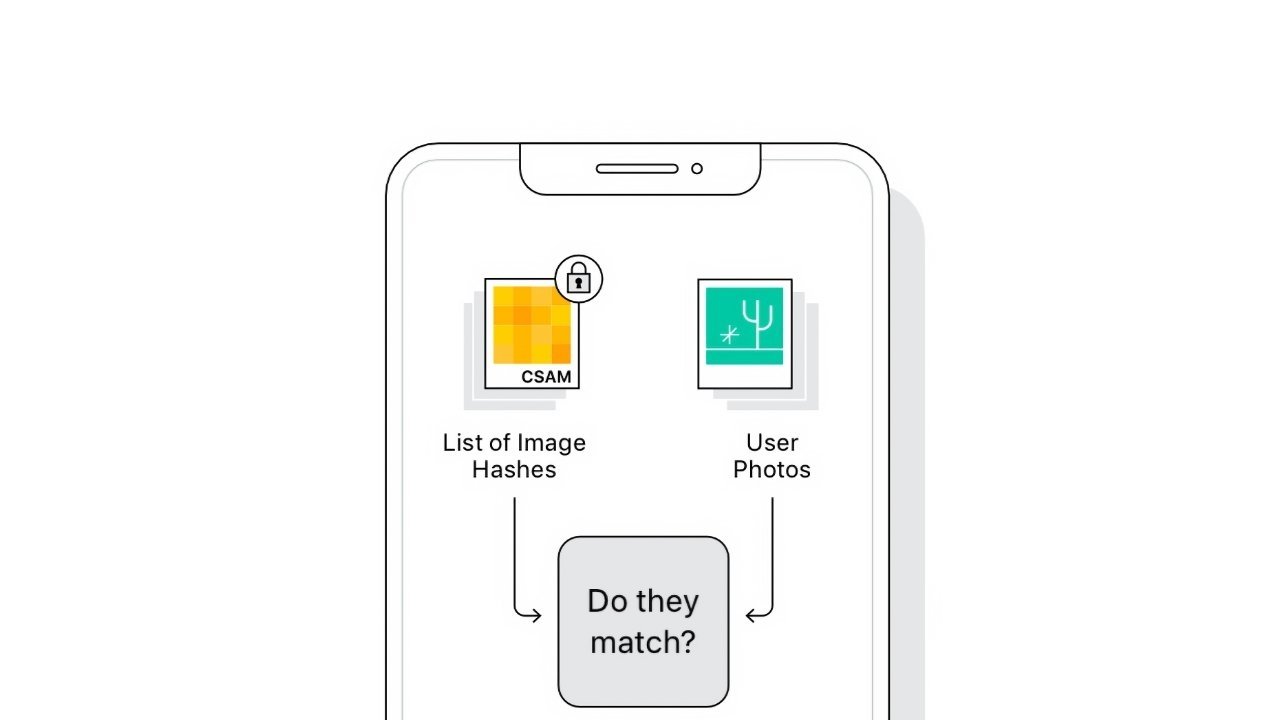

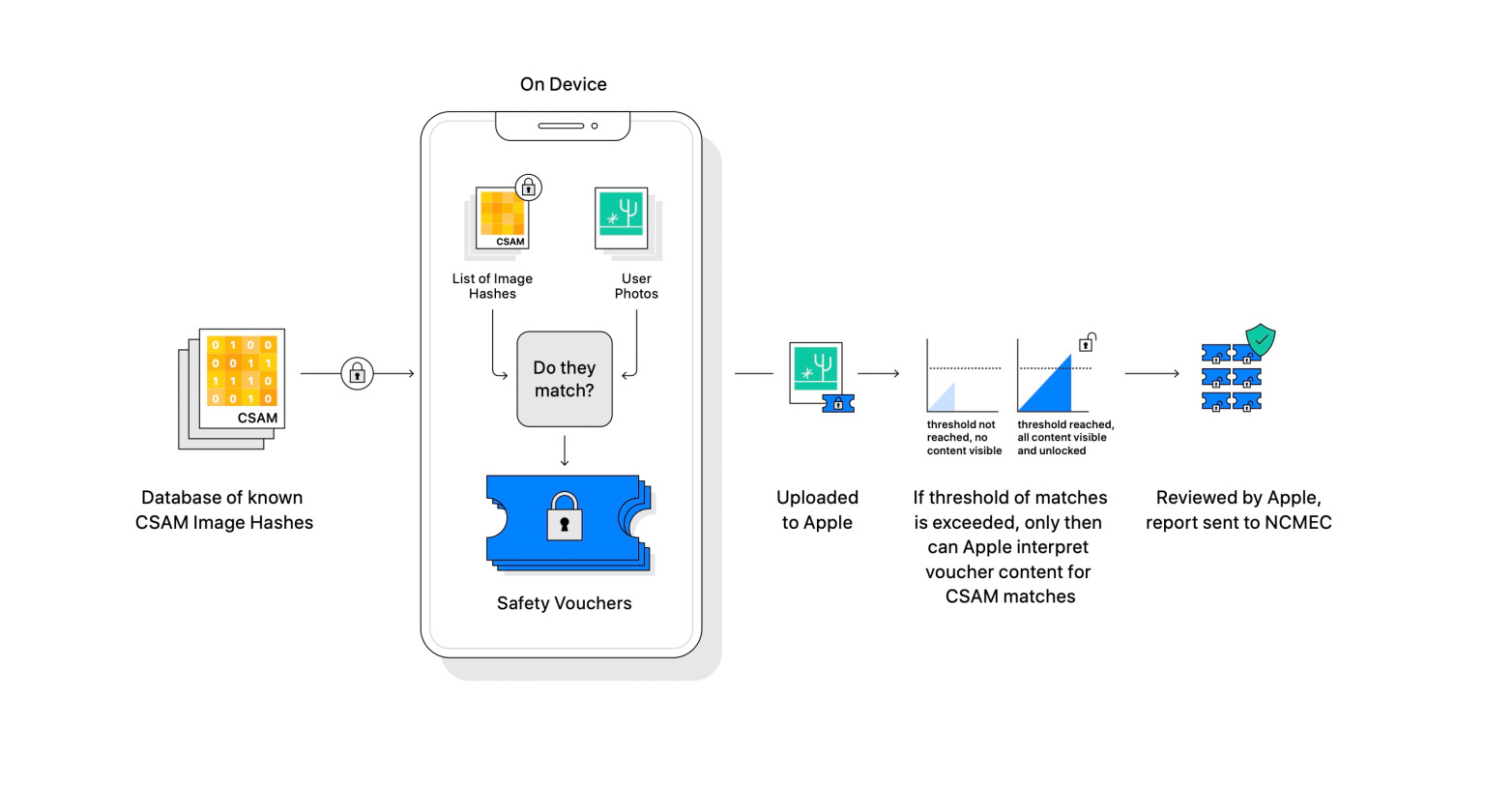

CSAM detection. Apple has been building the CSAM detection algorithm for years, so it is safe to assume some version of the code exists for testing. It works by comparing the photos users upload to iCloud against a database of known CSAM maintained by the National Center for Missing & Exploited Children (NCMEC) and other child safety organizations. Only the matches to known CSAM pictures ...

CSAM Detection enables Apple to accurately identify and report iCloud users who store known Child Sexual Abuse Material (CSAM) in their iCloud Photos accounts. The CSAM detection feature will be coming to iPhone in the fall with iOS 15 and other updates to prevent the spread of child pornography. Apple's CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC.

By moving the process on device, Apple's method is ... There's a team within Apple I have no ... Apple's method of detecting known CSAM is designed with user privacy in mind.

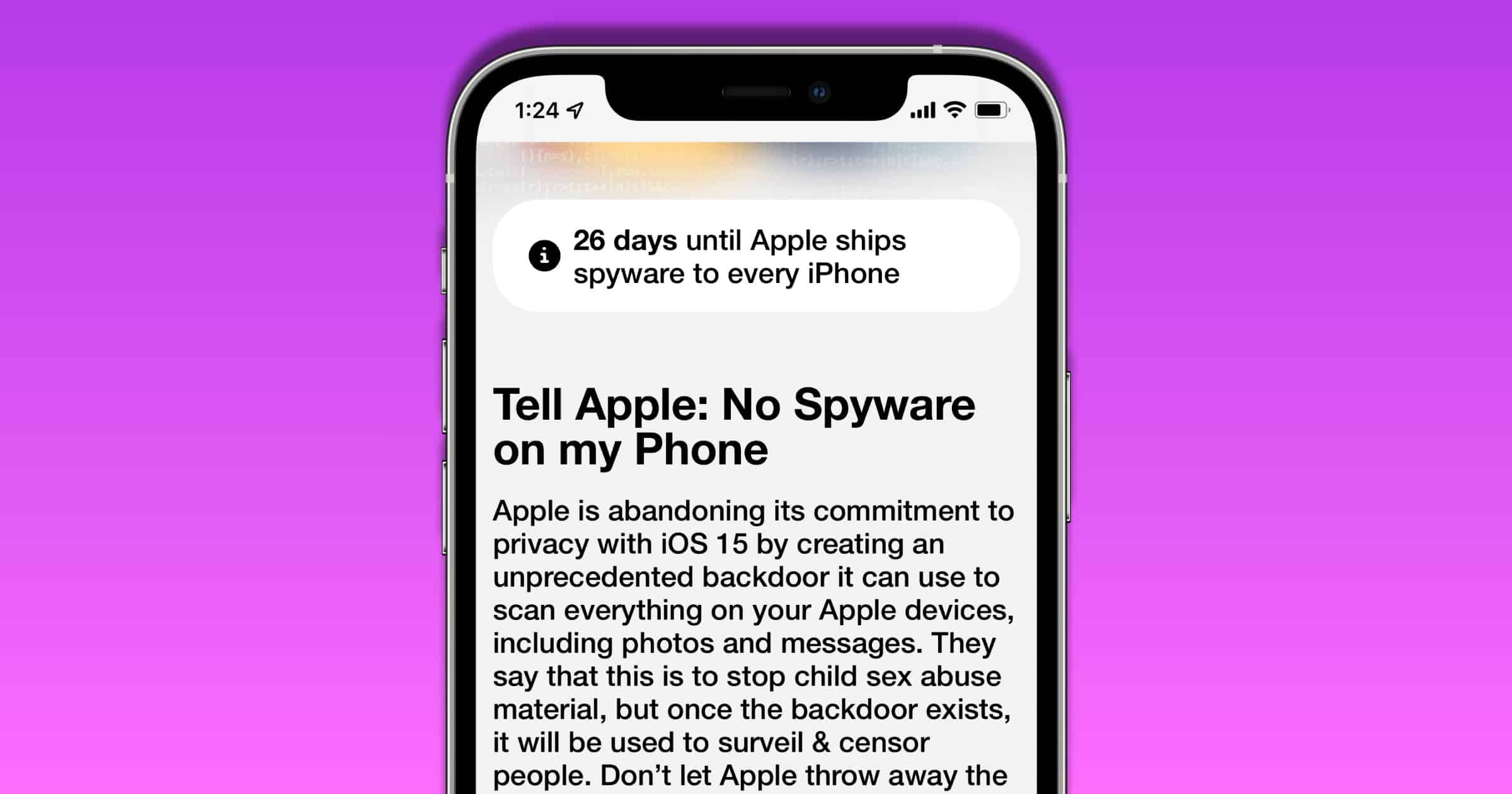

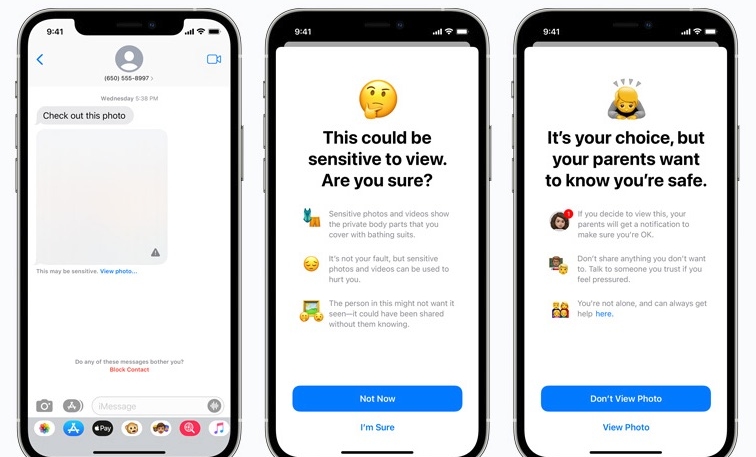

These features are intended to help protect children online and curb the spread of child sexual abuse material (CSAM). On September 3, Apple announced it would be delaying CSAM detection, which was meant to roll out later this fall, to collect input and make improvements before releasing these critically ... CSAM detection is a technology that finds such content by matching it against a database of known CSAM.

Apple revealed the technology as part of an upcoming software update. The usual way to detect CSAM is when cloud services like Google Photos scan uploaded photos and compare them against a database of known CSAM images. Taken from the white paper from Apple on CSAM detection.
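The database comparison described above can be sketched in a few lines. This is only an illustration under stated assumptions: it uses SHA-256 of the raw bytes as a stand-in fingerprint, whereas real systems rely on perceptual hashes that survive resizing and re-encoding. The names `digest`, `find_matches`, `known_digests`, and `uploads` are hypothetical and do not come from any vendor's implementation.

```python
import hashlib


def digest(image_bytes: bytes) -> str:
    """Stand-in fingerprint: SHA-256 of the raw bytes (NOT a perceptual hash)."""
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(uploads: dict[str, bytes], known_digests: set[str]) -> list[str]:
    """Return the names of uploads whose fingerprint appears in the known set."""
    return [name for name, data in uploads.items() if digest(data) in known_digests]


# Hypothetical data: byte strings stand in for image files.
known_digests = {digest(b"known-image-A"), digest(b"known-image-B")}
uploads = {
    "holiday.jpg": b"unrelated-photo",
    "copy.jpg": b"known-image-A",
}

matches = find_matches(uploads, known_digests)
print(matches)
```

Because only fingerprints are compared, the service holding the database never needs the original images, only their hashes. An exact cryptographic hash like the one used here would miss any image that has been altered even slightly, which is why production systems use perceptual hashing instead.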

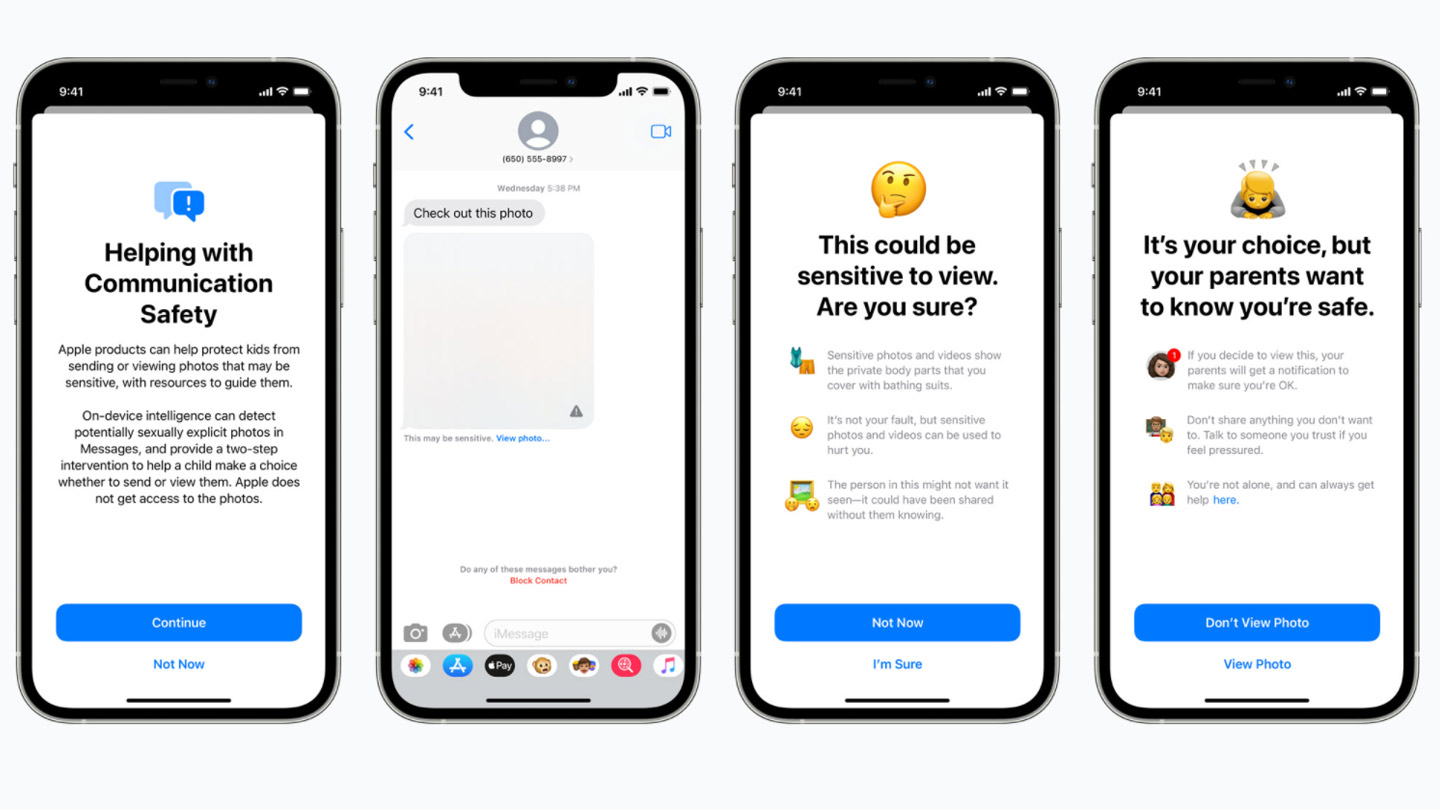

Using a NeuralHash system, users' iCloud Photos will be ... The new features will scan messages to alert parents and children that an image could potentially be CSAM, expand Siri and Search to provide resources and support in unsafe situations, and detect CSAM images stored in iCloud.

If a user disables photo syncing in Settings, CSAM detection no longer works. The state of the art of detecting CSAM pictures is not public. Obviously, this raises a concern about the people who have to look at this stuff.

By the time a CSAM hash detection makes it out of the automated system, it should have a high degree of accuracy, but still nothing happens until humans look at it and verify that it is in fact CSAM. The visual derivative summarizes the picture so that law enforcement can tell whether the match is correct. Finally, updates to Siri and Search provide parents and children expanded information and help if they encounter unsafe situations.

CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos. CSAM Detection works only in conjunction with iCloud Photos, the part of the iCloud service that uploads photos from a smartphone or tablet to Apple's servers.

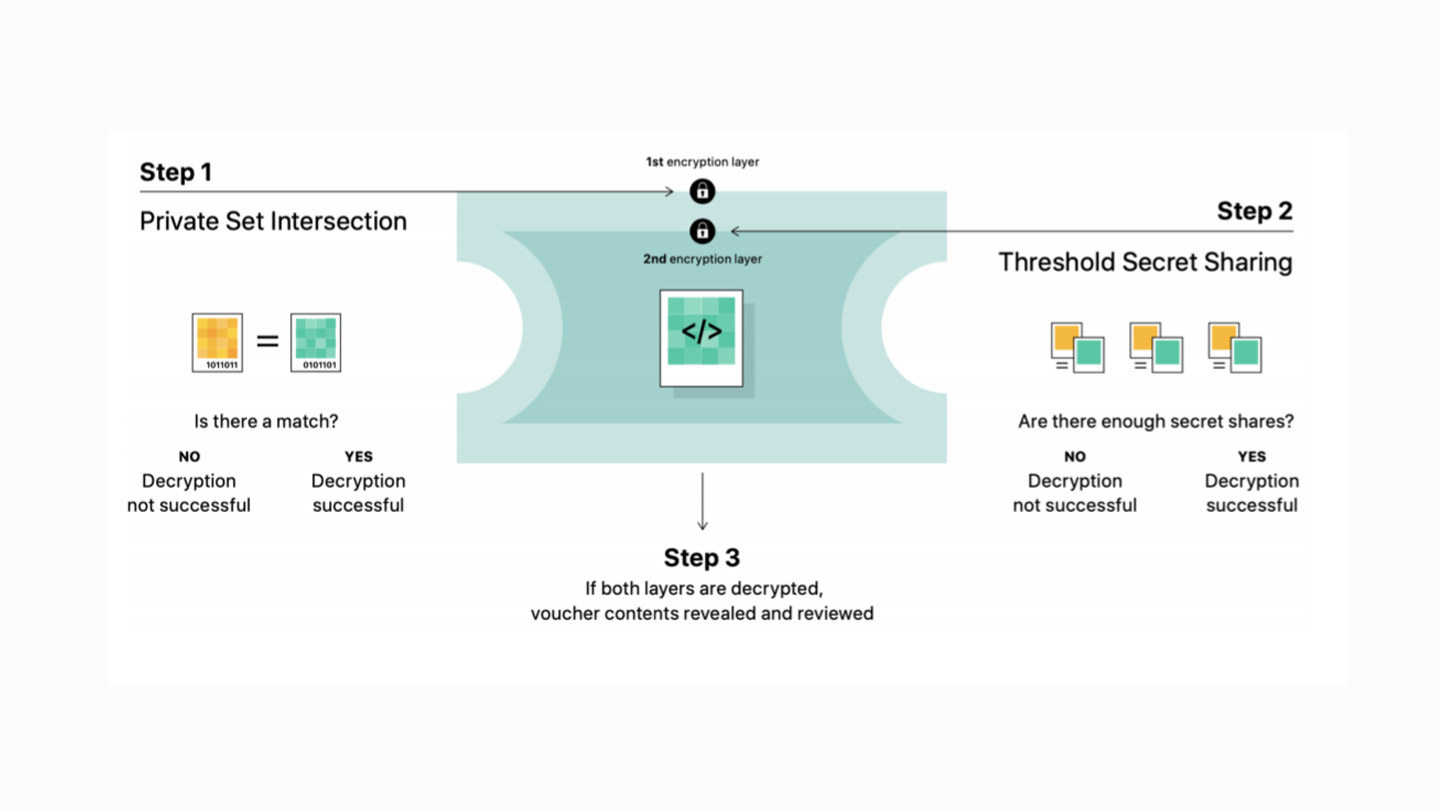

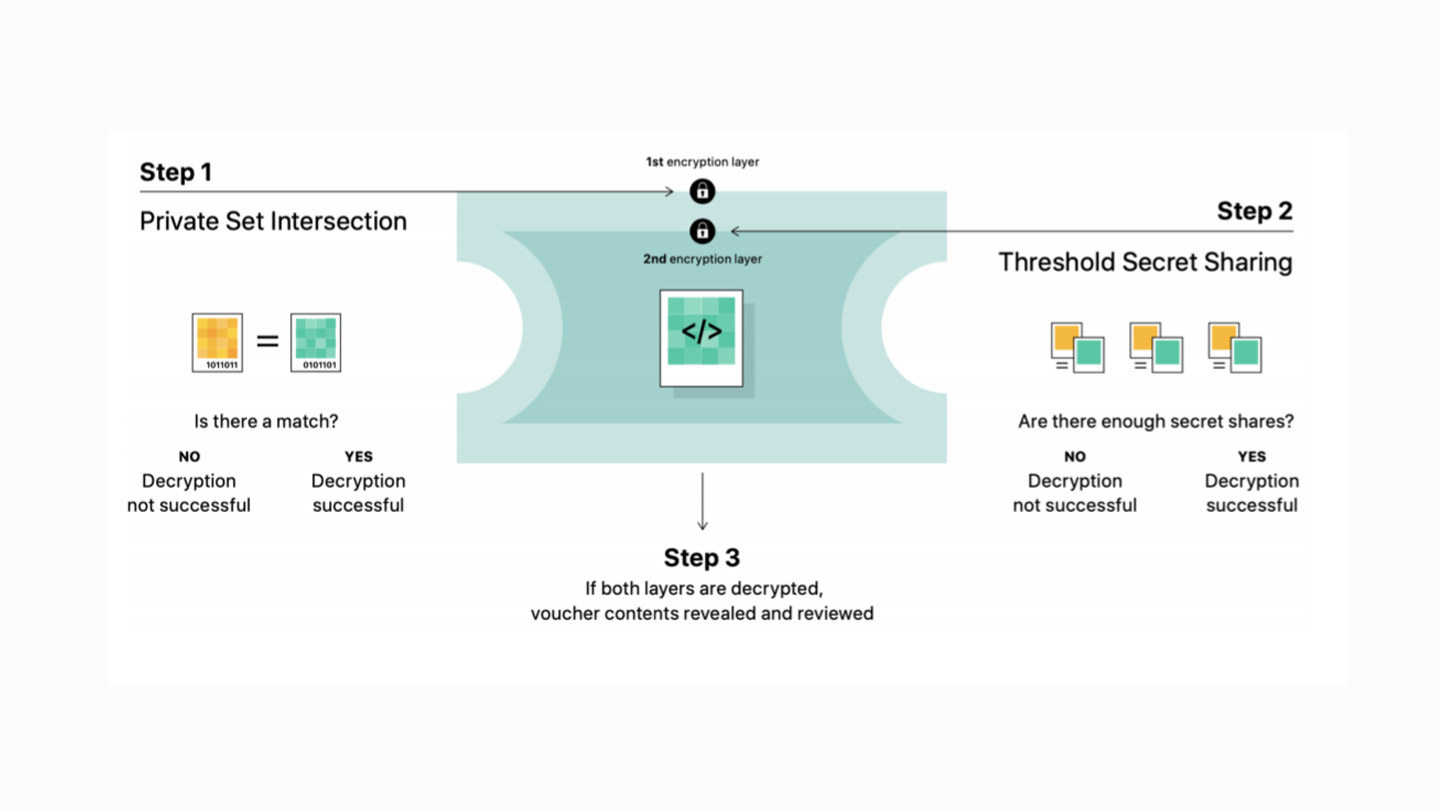

Apple's implementation of CSAM detection does not require that Apple servers scan every photo. iCloud Photos also makes the photos accessible on the user's other devices. The server can only decrypt if there are sufficient CSAM matches.
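One textbook way to get the property that a server can decrypt only after enough matches is threshold secret sharing. The sketch below is a plain Shamir scheme over a prime field, offered as an illustration of the idea rather than as Apple's implementation. The names `split_secret` and `recover_secret`, the choice of prime, and the example key are assumptions made for this example.

```python
import secrets

# A Mersenne prime; all arithmetic is done modulo this value (illustrative choice).
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, shares: int) -> list[tuple[int, int]]:
    """Split `secret` into `shares` points; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        # Horner's rule, highest-degree coefficient first.
        result = 0
        for c in reversed(coeffs):
            result = (result * x + c) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover_secret(points: list[tuple[int, int]]) -> int:
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical usage: one share is released per matching image.
key = 123456789
all_shares = split_secret(key, threshold=3, shares=5)
recovered = recover_secret(all_shares[:3])
print(recovered == key)
```

In this sketch, each matching image would contribute one share of a per-account key. With fewer shares than the threshold, interpolation yields an unrelated value, so the key, and anything encrypted under it, stays unreadable.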

Apple servers flag accounts exceeding a threshold number of images that match a known database of CSAM image hashes so that Apple can provide relevant information to the ... Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM. Likely impact of the technology.
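The threshold rule can be shown with a simple counter. This is a minimal sketch, assuming match events arrive as `(account, image_id)` pairs and that an account is flagged only once its number of distinct matching images exceeds the threshold. The function name, the event format, and the threshold value of 3 are illustrative assumptions.

```python
from collections import Counter


def accounts_over_threshold(match_events: list[tuple[str, str]], threshold: int) -> list[str]:
    """Return accounts whose count of distinct matching images exceeds `threshold`."""
    # Deduplicate so the same image reported twice counts only once per account.
    distinct = set(match_events)
    counts = Counter(account for account, _ in distinct)
    return sorted(account for account, n in counts.items() if n > threshold)


# Hypothetical events: alice has four distinct matches, bob has three.
events = [
    ("alice", "img1"), ("alice", "img2"), ("alice", "img3"), ("alice", "img4"),
    ("alice", "img4"),  # duplicate report, ignored
    ("bob", "img1"), ("bob", "img2"), ("bob", "img3"),
]

flagged = accounts_over_threshold(events, threshold=3)
print(flagged)
```

Requiring several matches before anything is flagged means a single false-positive hash collision does not by itself expose an account.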

Apple has delayed plans to roll out its child sexual abuse material (CSAM) detection technology that it chaotically announced last month, citing feedback from customers. 6:14 AM PDT, September 3, 2021.

For Apple, analyzing potentially harmful material is not new. Early last month, Apple announced it would introduce a new set of tools to help detect known child sexual abuse material (CSAM) in photos stored ... A developer claims to have reverse-engineered the NeuralHash algorithm used in Apple's CSAM detection. Conflicting views have been expressed.

In the above image, the threshold is set at 3; hence, only after the threshold is crossed the ...

Apple CSAM Detection Failsafe System Explained SlashGear

Apple's CSAM Detection Reverse-Engineered, Claims Developer 9to5Mac

Apple Details the Ways Its CSAM Detection System Is Designed to Prevent Misuse 9to5Mac

Apple's CSAM Detection Tech Is Under Fire Again TechCrunch

Apple Plans to Use CSAM Detection to Monitor Users Kaspersky Official Blog

Great Video Explaining How Apple's NeuralHash CSAM Detection Works, How to Evade It, and How a Potential Flaw Can Let Apple and Others Misuse It r/apple

Researchers Say They Built a CSAM Detection System Like Apple's and Discovered Flaws Engadget

Outdated Apple CSAM Detection Algorithm Harvested From iOS 14.3 U AppleInsider

Tell Apple You Oppose iOS 15 CSAM Detection With This Petition The Mac Observer

Apple Outlines Security and Privacy of CSAM Detection System in New Document MacRumors

Apple Delays Plans to Roll Out CSAM Detection in iOS 15 After Privacy Backlash TechCrunch

Apple Confirms CSAM Detection Only Applies to Photos, Defends Its Method Against Other Solutions 9to5Mac

Apple CSAM Detection: How to Stop It From Scanning Your iPhone, iPad Photos Tech Times

Apple Confirms Child Sexual Abuse Material (CSAM) Detection Doesn't Work When iCloud Photos Is Disabled