CSAM Apple: CSAM Scanning on the iPhone: Corellium Wants to Keep a Close Eye on Apple (Heise Online)

Crucially, Apple repeatedly stated that its claims about its CSAM-scanning software are subject to code inspection by security researchers, like all other iOS device-side security claims. Its senior vice president of software engineering, Craig Federighi, went on the record to say that security researchers are constantly able to inspect what's happening in Apple's phone software. Apple's CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups.

Apple's CSAM Prevention Features Are a Privacy Disaster in the Making

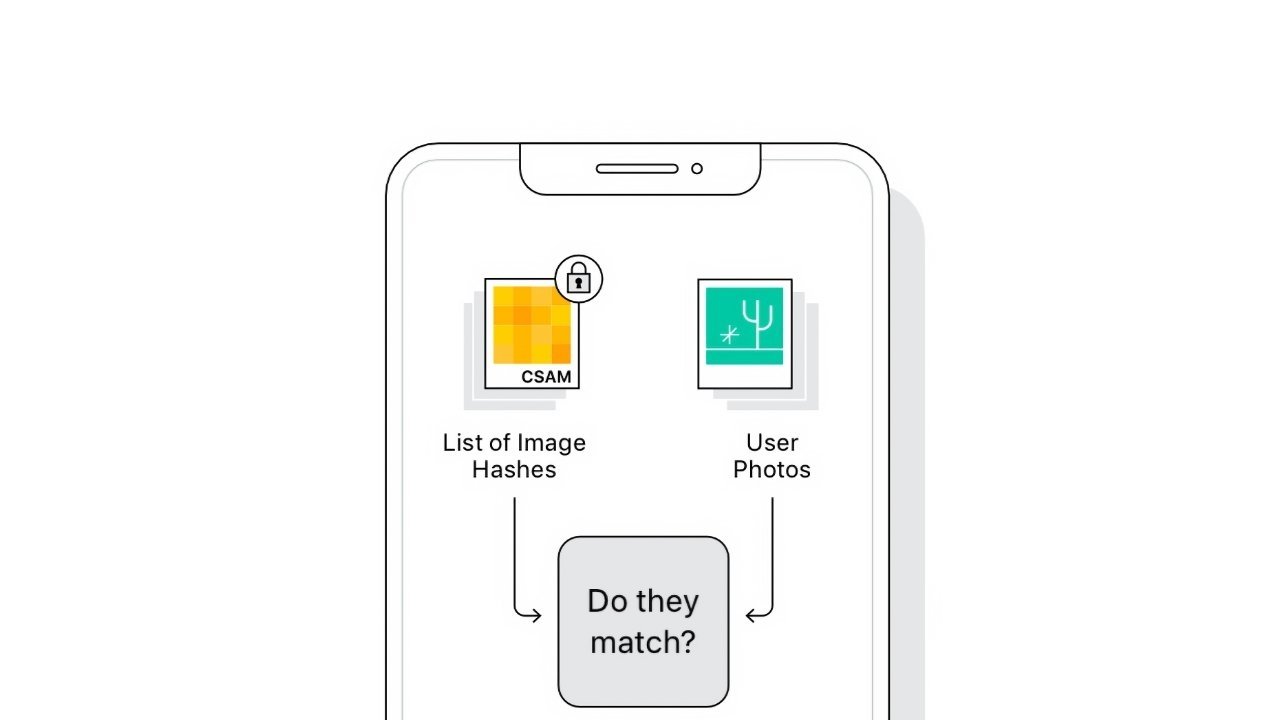

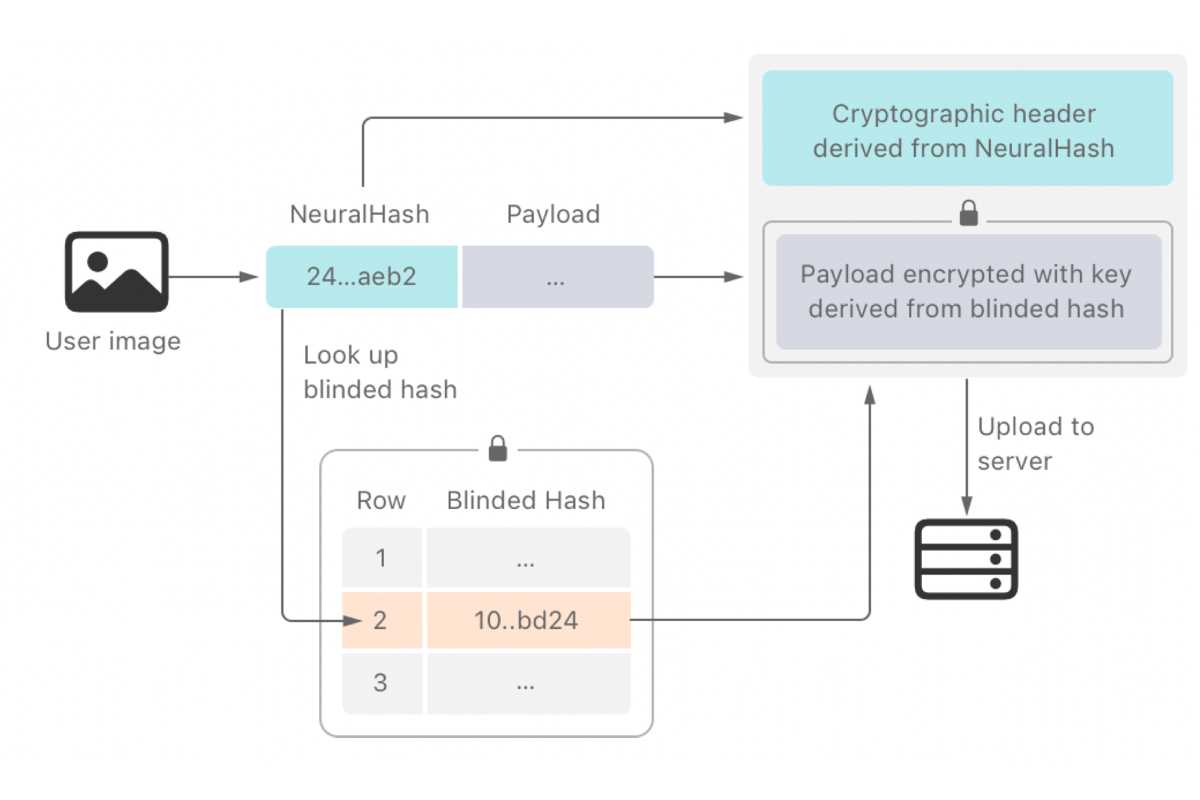

Apple said its new CSAM detection technology, NeuralHash, instead works on a user's device and can identify if a user uploads known child abuse imagery to iCloud without decrypting the …

Apple would have deployed to everyone's phone a CSAM-scanning feature that governments could and would subvert into a surveillance tool to make Apple … CSAM refers to content that depicts sexually explicit activities involving a child. So, by definition, I can't see how a series of photos could all match one or even a few CSAM images.

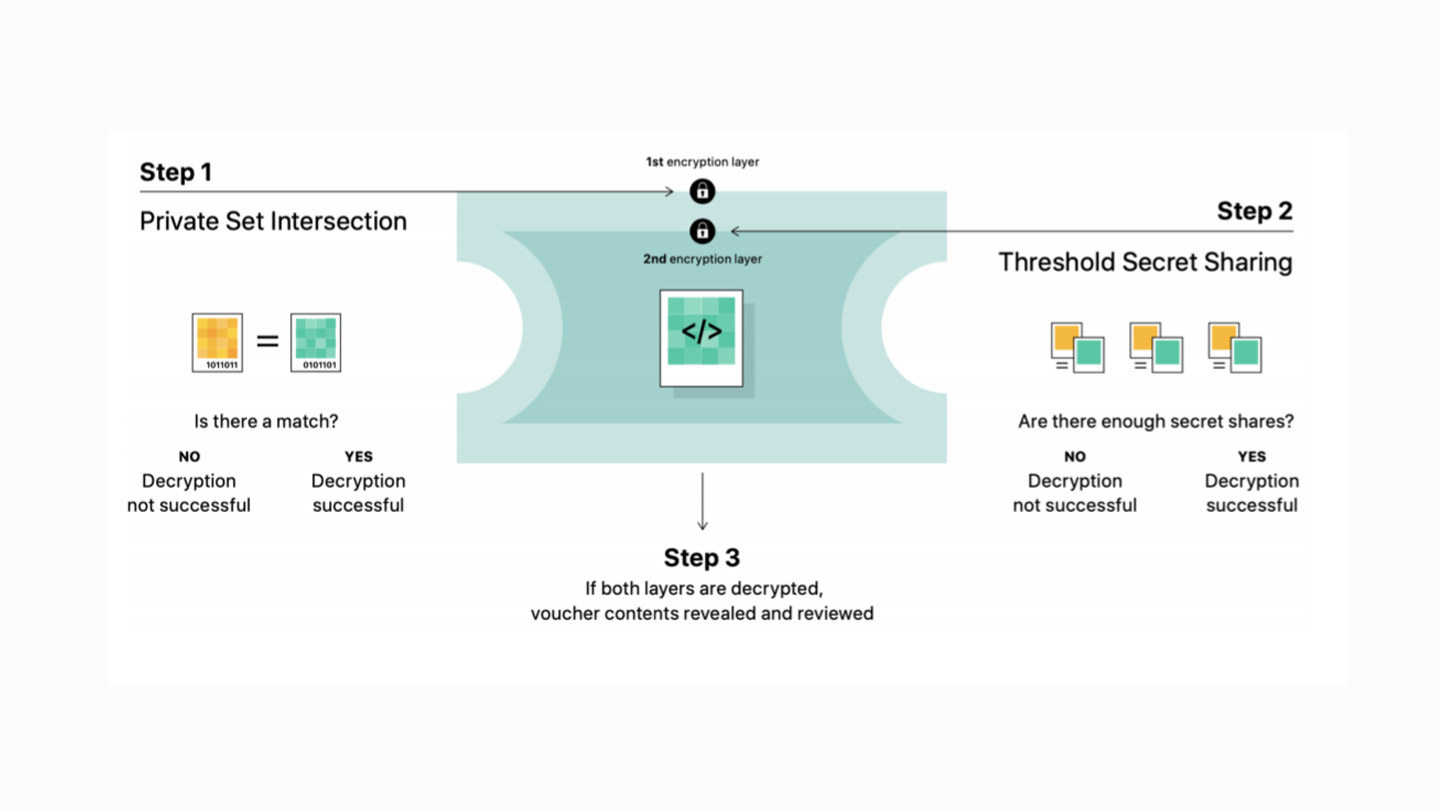

6:14 AM PDT, September 3, 2021. The CSAM detection relies on a … Governments were already …

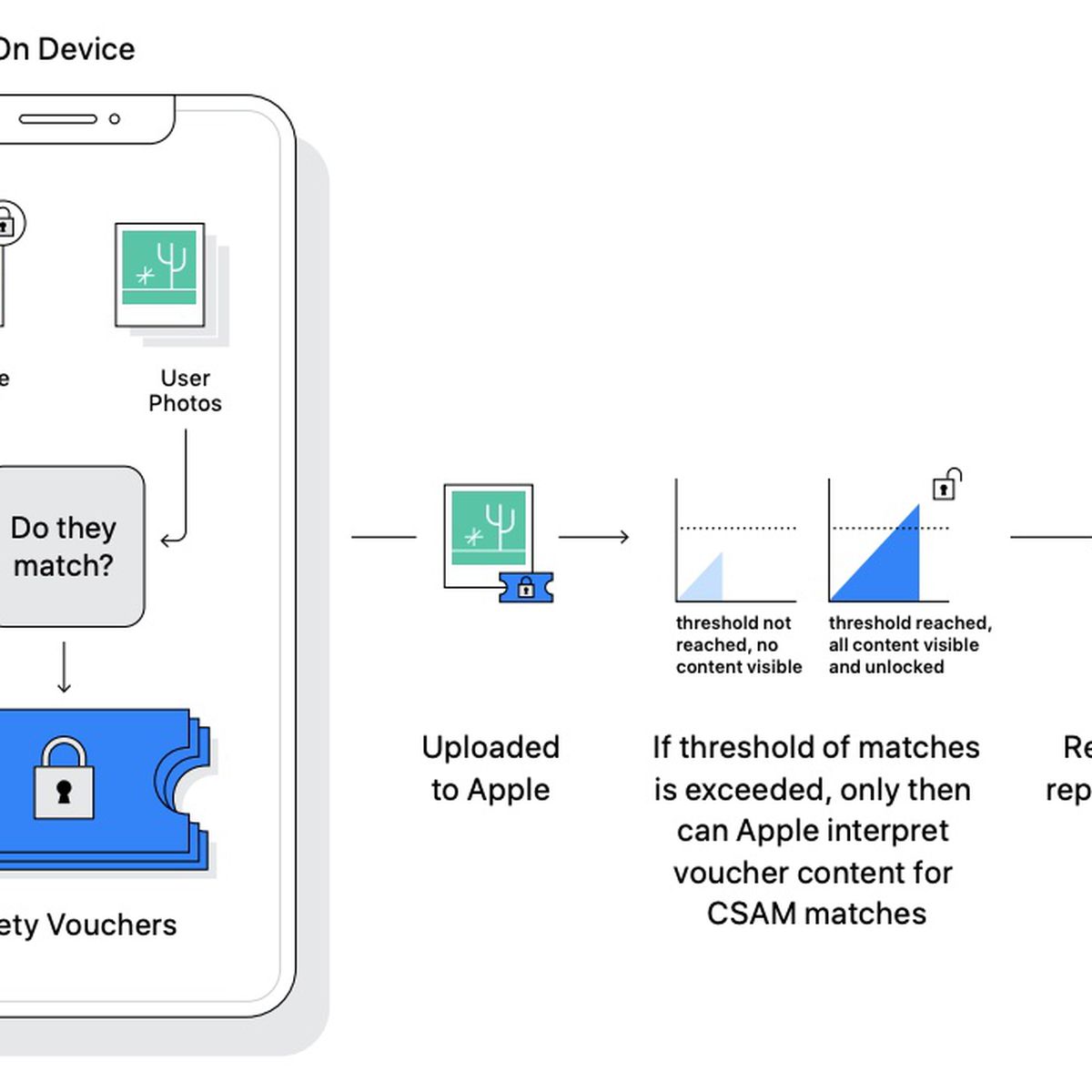

This will enable Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC). The set of image hashes used for matching is drawn from known, existing images of CSAM and contains only entries that were independently submitted by two or more child safety organizations operating in separate sovereign jurisdictions. Apple has delayed plans to roll out its child sexual abuse material (CSAM) detection technology, which it chaotically announced last month, citing feedback from customers.
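The hash-list rule described above (an entry is kept only if two or more organizations in separate jurisdictions submitted it independently) can be illustrated with a minimal sketch. This is not Apple's implementation: the function name `build_hash_list`, the tuple layout, and the sample organizations and hash strings are all hypothetical, and plain strings stand in for real image hashes.

```python
from collections import defaultdict


def build_hash_list(submissions):
    """Keep only hashes submitted from at least two distinct jurisdictions.

    `submissions` is an iterable of (organization, jurisdiction, image_hash)
    tuples. The layout is an assumption made for this sketch.
    """
    jurisdictions_by_hash = defaultdict(set)
    for _organization, jurisdiction, image_hash in submissions:
        jurisdictions_by_hash[image_hash].add(jurisdiction)

    # A hash seen in two different jurisdictions necessarily came from
    # two different organizations, so counting jurisdictions is enough here.
    return {
        image_hash
        for image_hash, jurisdictions in jurisdictions_by_hash.items()
        if len(jurisdictions) >= 2
    }


# Hypothetical sample data: only "h1" was submitted from two jurisdictions.
sample = [
    ("org_a", "US", "h1"),
    ("org_b", "UK", "h1"),
    ("org_a", "US", "h2"),
    ("org_c", "US", "h3"),
    ("org_a", "US", "h3"),
]
print(build_hash_list(sample))
```

In this sketch, a hash that only one organization (or only one jurisdiction) supplies never reaches the final list, which is the property the text attributes to the system.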

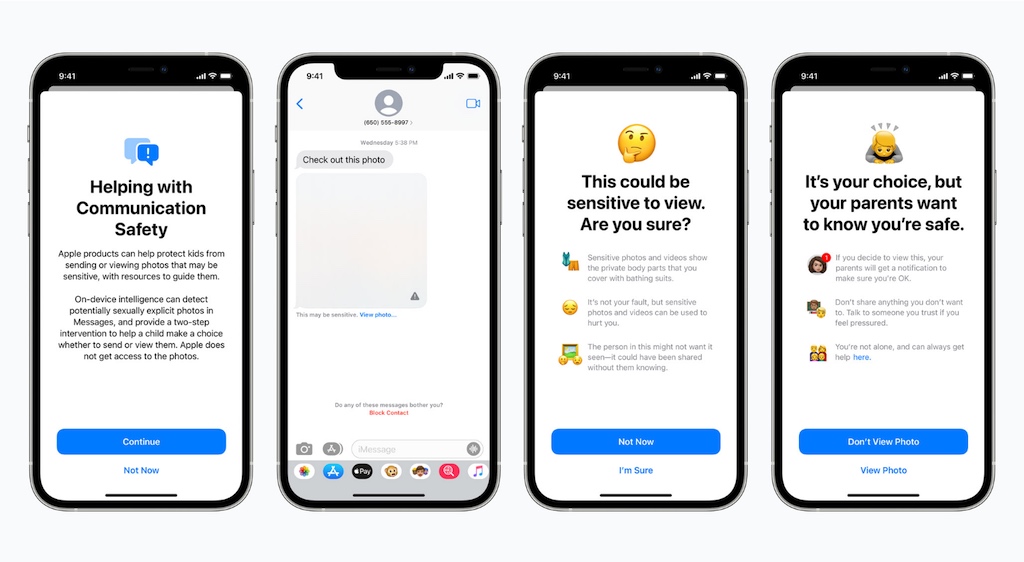

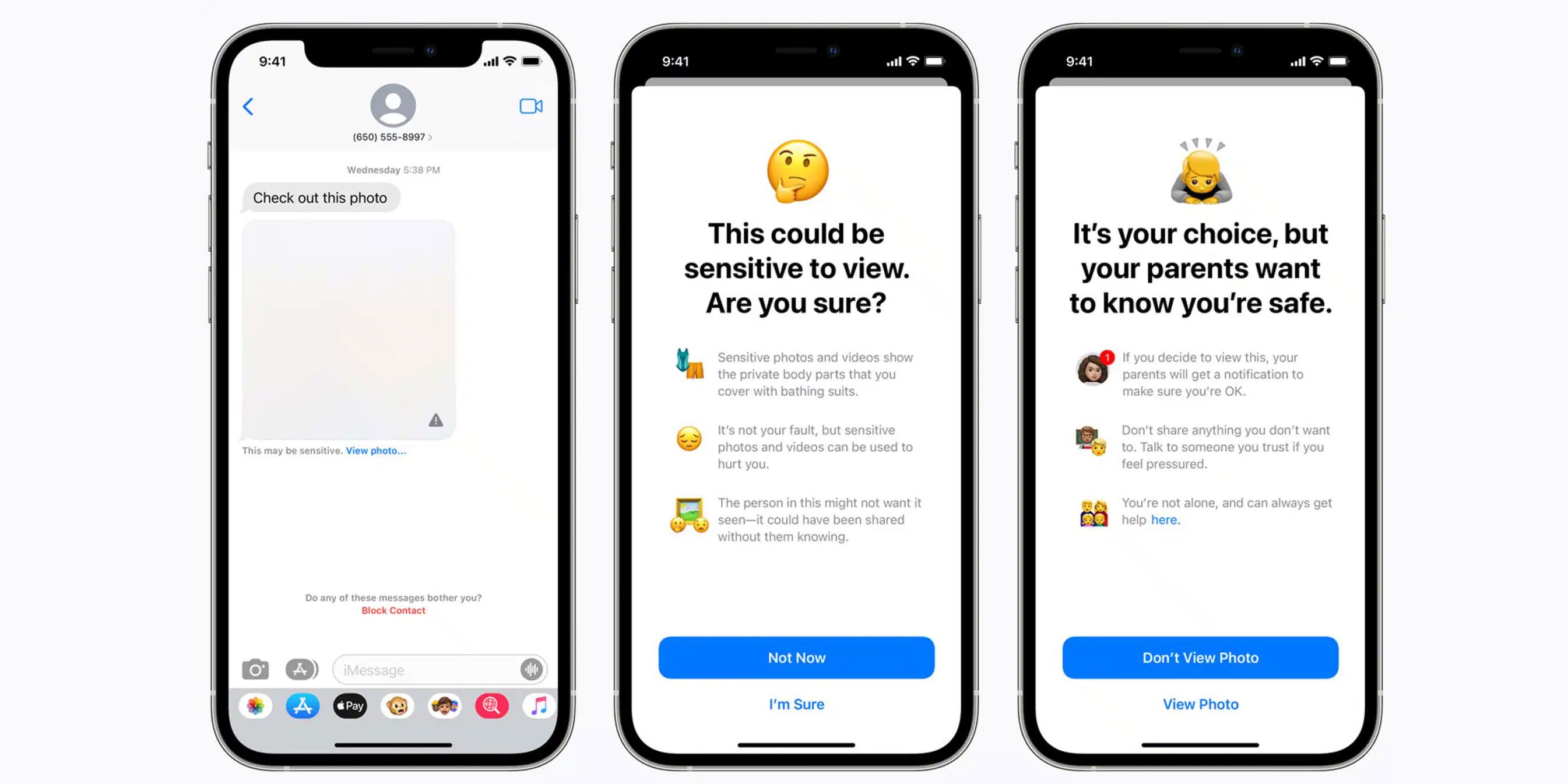

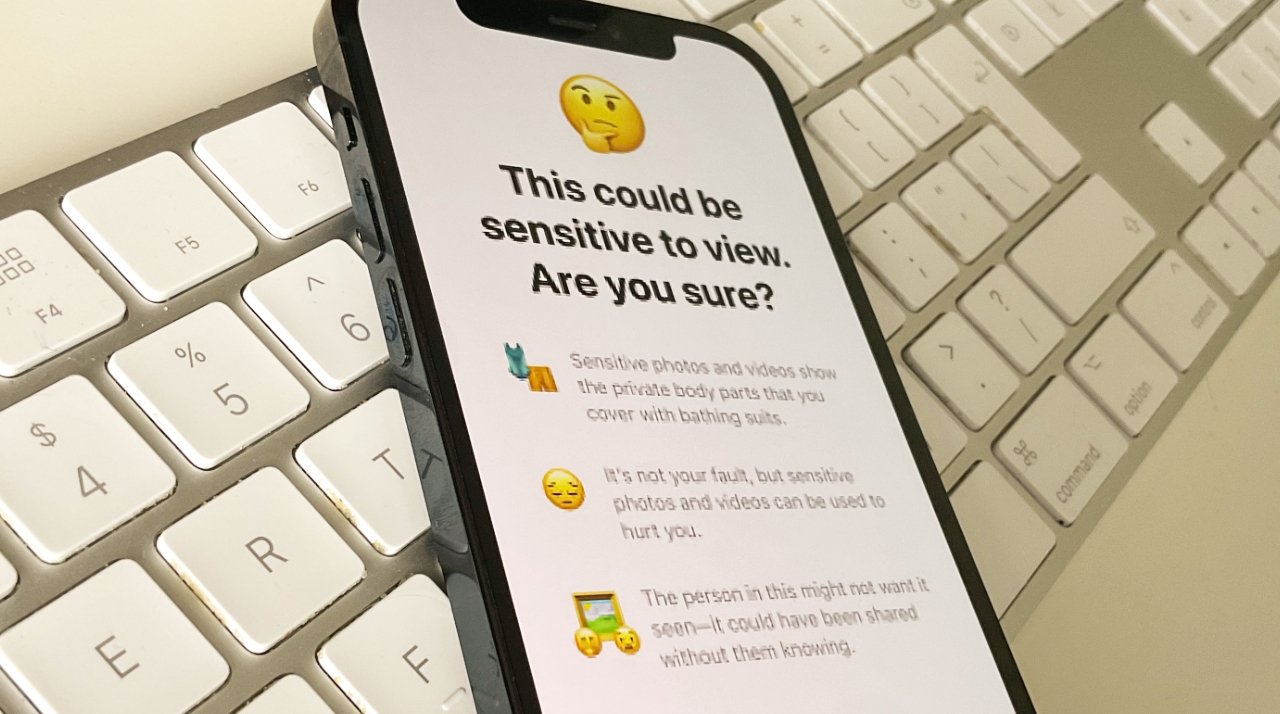

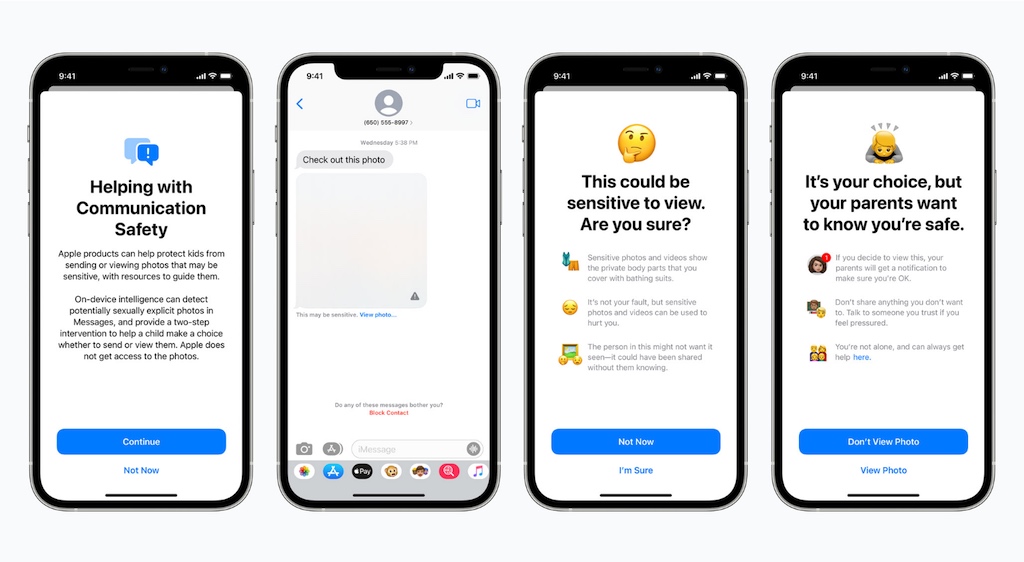

Apple would refuse such demands, and our system has been designed to prevent that from happening. Apple does not add to the set of known CSAM. A recent study from Thorn reports that self-generated child sexual abuse material (SG-CSAM) is a rapidly growing area of child sexual abuse material (CSAM) in circulation online and being consumed by communities of abusers. Apple's new Expanded Protections for Children aims to slow the growth of this practice.

Apple is far from the only company that scans photos to look for CSAM. Most users are unlikely to have CSAM on their phone, and Apple claims only around 1 in 1 trillion accounts could be incorrectly flagged. Nudes shared between romantic partners often spread throughout a peer group and can end up online in cases of revenge porn.

Apple has claimed that its new CSAM tech is better and more private than what other companies have implemented on their servers, because the scanning is done entirely locally on the iPhone rather than on the server. Apple announced its own plans to begin scanning for CSAM in early August 2021. Apple argues its system is actually more secure.

The biggest concern raised when Apple said it would scan iPhones for child sexual abuse material (CSAM) is that there would be spec-creep, with governments insisting the … The CSAM system does not actually scan the pictures in a user's iCloud account. Apple's system also relies on client-side scanning and local on-device intelligence.

Apple says its CSAM scan code can be verified by researchers. Apple's CSAM detection capability is built solely to detect known CSAM images stored in iCloud Photos that have been identified by experts at NCMEC and other child safety groups. In other words …

Could governments force Apple to add non-CSAM images to the hash list? Apple introduced CSAM detection together with several other features that extend parental controls on Apple mobile devices. Apple has encountered monumental backlash to a new child sexual abuse material (CSAM) detection technology it announced earlier this month.

Apple has also stated that the implementations used by other companies require every single photo a user stores on a server to be scanned, while the majority of them aren't. Apple has published a FAQ titled Expanded Protections for Children, which aims to allay users' privacy concerns about the new CSAM detection in … For example, parents receive a notification if someone sends their child a sexually explicit photo via Apple's messaging system.

Apple's plans to scan users' iCloud Photos libraries against a database of child sexual abuse material (CSAM) to look for matches, and to scan children's messages for explicit content, have come under fire. The system which Apple … The set of image hashes used for matching comes from known, existing images of CSAM.

Instead, it relies solely on matching the hashes of pictures stored in iCloud to known CSAM hashes provided by … NCMEC acts as a comprehensive reporting center for CSAM and works in … To help address this, new technology in iOS and iPadOS will allow Apple to detect known CSAM images stored in iCloud Photos.

In a stunning new post, Edward Snowden has delved into Apple's CSAM (child sexual abuse material) detection system coming to Apple's approx. 1.65BN … Apple's approach to doing so, however, is unique. There's a threshold for CSAM too: a user needs to pass that threshold to even get flagged.
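The two ideas described above, matching uploaded image hashes against a list of known hashes and flagging an account only once a threshold is passed, can be sketched as follows. This is a simplified illustration under stated assumptions, not Apple's design: plain strings stand in for NeuralHash values, no cryptographic protection is modelled, the function names are hypothetical, the threshold value of 3 is made up for the example, and treating "pass" as "greater than or equal to" is an assumption.

```python
def count_matches(uploaded_hashes, known_hashes):
    """Count distinct uploaded hashes that appear in the known-hash list.

    Duplicates are collapsed, so uploading the same image twice counts once
    (an assumption made for this sketch).
    """
    return len(set(uploaded_hashes) & set(known_hashes))


def is_flagged(uploaded_hashes, known_hashes, threshold):
    """Flag an account only when the match count reaches the threshold."""
    return count_matches(uploaded_hashes, known_hashes) >= threshold


# Hypothetical data: two distinct matches, below a threshold of 3.
known = {"a1", "b2", "c3", "d4"}
uploads = ["a1", "zz", "b2", "a1", "yy"]

print(count_matches(uploads, known))                      # 2
print(is_flagged(uploads, known, threshold=3))            # False
print(is_flagged(uploads + ["c3"], known, threshold=3))   # True
```

The point of the threshold in this sketch is that a single match, or a handful of them, never flags an account on its own.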

Apple has chosen to take a somewhat different approach, which it … Introduced as a multi-pronged effort to securely monitor user content for offending material, Apple's CSAM plan involved in-line image detection tools to protect children using Messages safety …

Is Apple's Controversial CSAM Scanning a Precursor to Something That Big Brother Will Hate (iSecurityGuru)

Apple to Postpone Controversial CSAM Rollout (ARN)

CSAM Scanning Controversy Was Entirely Predictable, Apple (9to5Mac)

Civil Rights Advocates: Apple Should Abandon Child Porn Scan (Macwelt)

Outdated Apple CSAM Detection Algorithm Harvested from iOS 14.3 [U] (AppleInsider)

EFF: Apple Should Drop Its CSAM Plans Entirely

Apple Plans to Use CSAM Detection to Monitor Users (Official Kaspersky Blog)

iOS 15 Child Safety Features: Outrage Grows over On-Device Photo Scanning (Germanic Nachrichten)

Apple Addresses CSAM Detection Concerns, Will Consider Expanding System on Per-Country Basis (MacRumors)

Apple Delays Controversial iCloud Photo CSAM Scanning (Macworld)

Apple, EU Slammed for Dangerous Child Abuse Imagery Scanning Plans (AppleInsider)

Apple CSAM Detection Failsafe System Explained (SlashGear)

CSAM Detection on the iPhone: Apple Considers Local Child Porn Scanner Secure (Heise Online)

Apple Responds to Edward Snowden and EFF Criticism of CSAM Detection (Smartphones24)

Apple's CSAM Detection Is Ineffective and Invasive, Cybersecurity Researchers Warn (De Atsit)

Apple's CSAM Detection Tech Is Under Fire Again (TechCrunch)

CSAM Scanning on the iPhone: Corellium Wants to Keep a Close Eye on Apple (Heise Online)

iCloud Photos Scan: Apple Addresses Concerns About CSAM Detection (Macerkopf)